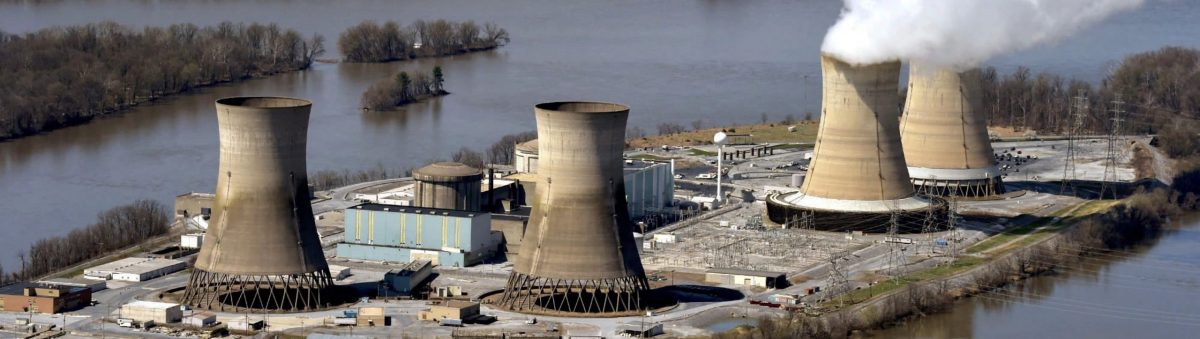

Despite initial fears to the contrary, the accident at Three Mile Island did not result in any significant damage to local residents’ health or property. The partial meltdown was successfully managed, and the unaffected reactor, TMI-1, continued in operation for years after the crisis. A major humanitarian crisis had been averted. Where, then, is the nuclear disaster?

Three Mile Island was a significant event not for the accident itself, but because of the way that humans responded to it. The media’s portrayal of the disaster, for example, stoked public fear toward nuclear energy, even as government actors took steps to reassure Americans by implementing sweeping policy changes. The effects of the accident remain baked into American culture today, as the debate over nuclear power’s efficacy continues and government bureaucracy has assumed an increasingly active regulatory role in the industry. In short, the TMI crisis was disastrous in the sense that it was conceived that way by the human actors responding to it. And as with many historical disasters, the contours of crisis are drawn as much by the human response as by the inciting event itself.

Was TMI an Exception?

In the wake of the TMI accident, sociologist Charles Perrow developed his Normal Accident Theory, which holds that in tightly coupled complex systems – that is, systems whose multiple components change rapidly – accidents become inevitable over a long enough period of time, despite their short-term implausibility (Scharre 2016, 25).

The nuclear reactor at TMI-2 typifies the sort of complex system that is prone to disaster, per Perrow’s theory. Features of such systems include:

- Many possible causes of failure. At Three Mile Island, both human and mechanical errors contributed to the reactor’s partial meltdown. Operators might have left the cooling system on instead of uncovering the reactor core, which would have lessened the damage. Several key indicators, however, gave false information in the early stages of the malfunction, which led the operators to take erroneous action in full confidence that they were responding correctly (Scharre 2016, 27).

- Unanticipated interactions between system elements. The plant designers didn’t anticipate, for example, that the air conditioning units at TMI might serve as a channel to expel radioactive steam (NYT 1979). The unforeseen failures in minor plant equipment, and their collective outsize effect on the TMI accident, were a key finding of the Kemeny Commission (Commission, 9).

- Complexity of the system. Nuclear reactors are highly technical and complex, as evidenced by the media’s persistent struggle to understand the full scope of the disaster in the days following.

- Tight coupling between system elements. Many aspects of TMI-2’s operation were closely linked, such as the direct increases in heat and pressure resulting from the decrease in water level in the reactor’s core. In the initial phase of the disaster, over 100 warning signals went off in the control room at the same time, making it impossible to respond to the disaster in real time (Commission, 11).

Many of the individual failures in the TMI-2 system were simple and might have been adequately managed in isolation. The interaction of so many of these minor failures, however, all of which responded to each other in unpredictable and nonlinear ways, ultimately led to a serious disaster that was nearly impossible to avoid (Scharre 2016, 27). The TMI reactor reflects a larger truth about the systems humans build. Sometimes, the sheer complexity of these structures makes accidents all too plausible, suggesting that man-made disasters may not always be the exceptional circumstance humans would like to believe they are.

Technological Determinism and Spoiled Expectations

As with other industrial and man-made accidents, Three Mile Island was marked by a general attitude of hubris prior to the event. The NRC’s Rasmussen Report, for example, promised that nuclear energy was perfectly safe and consequence-free; multiple energy companies in the industry likewise attempted to persuade the public of the unparalleled safety of nuclear energy. As the Kemeny Commission noted, a persistent assumption lingered in the years prior to the accident that nuclear power plants could be made sufficiently safe so as to be “people-proof” (Commission, 20).

And Americans bought it. As local grandmother Carol Pfeiffer explained after the crisis, “I don’t think hardly anybody in town realized the danger before the accident. We were just plain stupid about it. We didn’t understand. We were so pleased about the prosperity it brought to the town” (Zaretsky 2018, 61). Even the officials who were supposed to know better did not grasp the gravity of the risk, with the NRC’s director of nuclear reactor regulation, Harold Denton, later recalling that “within the NRC, no one really thought you could have a core meltdown. It was more a Titanic sort of mentality. This plant was so well designed that you couldn’t possibly have serious core damage” (Ibid.).

America’s generally relaxed attitude toward nuclear power reflected an outlook that scholar Nicole Fleetwood would later call technological determinism. This philosophy is marked by a central belief that technology is the prevailing force “behind progress and social development,” and Americans of all stripes exhibited their sympathy for this idea prior to TMI (Fleetwood 2006, 768). Middletown residents had faith that the nuclear reactor would forestall the region’s economic decline, bringing new jobs and prosperity to the area (Zaretsky 2018, 61). Nuclear power industry executives believed that their technology was error-proof and would prove a key component of American infrastructure in years to come. Even President Carter never strayed from his firm belief in the efficacy of nuclear power as a tool to wean the United States from its dependence on oil.

Writing about the Fukushima nuclear disaster years later, Harry Bernas offers what might be a succinct way to describe the pervasive attitudes of Americans prior to TMI: System 1 thinking. In this pattern, Bernas writes, humans tend to overlook their own technological shortcomings in favor of the immediate benefits such developments promise. Bernas’s stern warning in Fukushima’s aftermath that humans need to shift to System 2 thinking, marked by “new, adaptive patterns” to account for potential disaster, shows that the lessons of TMI continue to be relearned with each nuclear event (Bernas 2019, 1372). Indeed, as humanity prepares for some of its greatest challenges in the 21st century – COVID relief, rectifying slow violence around the world, and climate change mitigation among them – the disaster at Three Mile Island is as relevant as ever. Popular media threatens disinformation and sensationalism, and hubristic faith in human progress promises to face yet another reckoning.